
"Doing great things with AI... No. Really."

So, I don't buy into the whole idea that AI will take all the jobs and replace the real world. It's another big bubble like 3D printing, the metaverse, self-driving cars, and, well, moveable type, that will deflate quite a bit and leave us with useful tools. I use AI (well, machine learning, because it's not thinking for itself) every day for photo editing and regularly for upscaling older videos. It's nothing to be scared of. I like the idea of image generation for coming up with ideas, storyboarding and stuff like that.

It's also fun to fuck around with. With a lot of help from forum user messg, I've been playing around. This is some of the better stuff I've come up with using his experimental WAM training models.

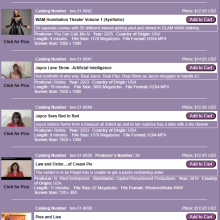

If you're interested at all, go to Forum>Groups>AI WAM. A bunch of people are having some fun there and pushing things forward. If you're cynical, well, go spend some (more) money buying producers' content -- that's how the real stuff gets made.


noise said: So, I don't buy into the whole idea that AI will take all the jobs and replace the real world. It's another big bubble like 3D printing, the metaverse, self-driving cars, and, well, moveable type, that will deflate quite a bit and leave us with useful tools. I use AI (well, machine learning, because it's not thinking for itself) every day for photo editing and regularly for upscaling older videos. It's nothing to be scared of. I like the idea of image generation for coming up with ideas, storyboarding and stuff like that.

It's also fun to fuck around with. With a lot of help from forum user messg, I've been playing around. This is some of the better stuff I've come up with using his experimental WAM training models.

If you're interested at all, go to Forum>Groups>AI WAM. A bunch of people are having some fun there and pushing things forward. If you're cynical, well, go spend some (more) money buying producers' content -- that's how the real stuff gets made.


So AI imaging for me is both a curiosity and a learning experience. Over the last year, the progression of DALL-E 3 and other public models has been off the charts. However, guardrails implemented by OpenAI, MS and others have severely limited the potential. (These models are way more flexible than what they currently allow.)

Thankfully, open-source models such as Stable Diffusion and Flux have evolved significantly over the same time period.

I'm now in the position that I can train these models. My focus has been on learning, seeing what the models are capable of, what the boundaries are, etc. They're not cheap to train, at least properly: $20-$60 each, and they take 5-10 days, but the results are impressive. They're not perfect, but I'll continue to chip away at the issues. My goal is to create a general purpose model incorporating multiple substances and concepts that can be used by people locally and online. The focus is on high quality output with the flexibility to interpret most imagined scenes rather than the frankly quite poor outputs of DALL-E 3 and Flux as they stand right now.

Is it a disruptive technology? Absolutely, but I don't see it replacing existing artists and creators. It's not a substitute for real scenes.

Just highlighting this...

messg said: My goal is to create a general purpose model incorporating multiple substances and concepts that can be used by people locally and online.

I have to say I go take a look at the AI forum every couple of weeks, and I've been constantly impressed by the leap in progress we have seen in the last 18 months! Kudos to messg and others who have the knowledge and resources to keep our WAM niche on the progress train.

Cheers!


I'd been thinking of posting "Why I like WAM AI" myself: I can imagine things I am unlikely to see from commercial producers, even if I was to purchase a custom.

I get much more turned on by real women. If you took a sexy woman in a low-cut dress wearing long gloves, soaked her and then hit her with a gooey pie, the mess running into her cleavage, I'd be over the moon.

And if you think about most of the really great mainstream piefights, each victim gets one or two pies and then it's on to another character. If there are multiple hits on the same person, she is doing something different each time: Natalie Wood looking triumphant after throwing her first pie, then later blinded and spinning around to hit Tony Curtis; the girl in a Stooge short saying, "Somebody give me a pie" after being pied during the "Lion Fight" speech, and so on. No WAM producer could afford that. (Well, OK, MG did that in Great Race.)

And if some of my AI victims happen to look like girls who wouldn't go out with me or give me the time of day...


Now, imagine these pictures not as pictures but rather as a storyboard for full-on video in which you can input the dialogue you want and the movement you want, and it will look just as real as anything that can be produced currently. Producers won't like it from a financial standpoint, which is understandable. But it's coming, and eventually it will be mostly indistinguishable from anything currently being offered. Of course that opens up a can of WAMworms regarding customs, i.e. paying X for a WAM custom or X for a synthetic custom.

You can call me,

Al


PhotoSlop said: Now, imagine these pictures not as pictures but rather as a storyboard for full-on video in which you can input the dialogue you want and the movement you want, and it will look just as real as anything that can be produced currently. Producers won't like it from a financial standpoint, which is understandable. But it's coming, and eventually it will be mostly indistinguishable from anything currently being offered. Of course that opens up a can of WAMworms regarding customs, i.e. paying X for a WAM custom or X for a synthetic custom.


Assuming this sci-fi fantasy comes true, producers will love it if they learn how to use it themselves. You only have a handful of WAM enthusiasts right now willing to learn AI image generation enough to consistently bang out decent-but-limited messy portraits. Assuming the learning curve for realistic video and audio will be even steeper, the number of people who can do it at a satisfying level will be tiny. And if they have two brain cells to rub together, that skill will be just as expensive as making a real video. Capitalism gon' capitalism.

The only people really getting screwed over are the human models, which sucks. But even then, they could theoretically create AI avatars of themselves and never have to worry again about showering chocolate pudding out of their hair.

Check out Project 20M on IG! @Project20M


This right here is the missing piece of the puzzle as far as I can see. Proprietary models such as DALL-E 3 can and have produced amazing unrestricted content, but over the last year the line has been moved over and over in terms of content filtering. I don't believe for one minute this is going to be more relaxed in the future, as the opportunity for abuse is there (celebrities, NSFW content, underage, etc.). No firm wants to carry the risk that a general model brings.

What has happened over the last year is the technology for imaging has evolved quickly so that you can train models pretty well locally and with cloud resourcing. This will improve incredibly over the next year.

Video, as far as I can see, is lagging behind by probably 18-24 months in terms of resources and technology, but it is moving too. I've started playing around with training video models rather than just using cloud generative services. It will come, but it's at least another generation or two of hardware away.

The possibilities are endless though. I can already train effective models using a single likeness if I wanted. I can introduce new concepts that the original model doesn't possess. Want a gunge tank? Sure, that can be added. Want specific bondage poses with the model sitting in a gunge tank wearing a specific outfit? Yup, that can be trained too. But this all comes with a cost. A good likeness model will take several days to train.

There was a quote I read a while back which I think holds very true: "If you want to train an ok image model, that's relatively simple. If you want to train a good model, that's going to cost you £££, time and a lot of effort."

btw, audio can be trained pretty accurately with 90-120 seconds of voice recordings now.

If I was a producer, I'd be asking myself what can I do now to capitalize on this in 6 months, 2 years etc.

PhotoSlop said: WAMworms

That one word stopped me in my tracks!

I have specific WAM fantasies in my head that I have wanted to see for a very long while. People have said I should just get customs done, but the cost would be astronomical. For example, a flooded food factory with deep caramel everywhere. Or a mudslide wiping out a small city and catching young women unawares, with no injuries of course.

If I wanted to see a basic pie in the face I could get a custom done or just watch existing content. But if I want to flood a city with mud, or fill a factory with caramel, I can use AI.

Here are a few of my wilder scenarios such as what I mentioned . . .

I have a hard time buying that generative AI will replicate what is most important about WAM content - authentic human behavior in the heavily nuanced world of erotica - anytime soon.

What it's good at is visualizing scenarios that would be impossible or impractical in real life, like a big gameshow with an audience or a contrived WAM fest at a formal event, etc. It has similar value to written fiction in that regard. Perhaps the value of AI to producers is some sort of hybrid reality, where instead of a model in a gunge tank in a spare room of the producer's house, we have a model in a gunge tank on stage surrounded by an audience.


One of the best things about AI WAM is that it gives you the opportunity to make images that feature clothing that usually never appears in WAM cos it's too niche of an interest. If for example you're like me, or one of the approximately 4 or 5 people I've run into on the internet who really like seeing formally dressed women wearing ties, and also really like WAM, then AI is an opportunity to create images that otherwise wouldn't exist, and to combine two very different kinks that never usually overlap. Sure, you can pay for customs, but customs can be very hit or miss, and AI can be refined endlessly.

The real thing is always better, and I never stop thinking of new ideas for customs, but years of mostly having to use your imagination really does come in handy when it comes to this type of thing, and AI is a great outlet for that.


xman10 said: I have a hard time buying that generative AI will replicate what is most important about WAM content - authentic human behavior in the heavily nuanced world of erotica - anytime soon.

That's one of the interesting things about AI: It can already create images that are indistinguishable from reality. Not every time, of course. It's still pretty rare without actual photo editing skills to fix the errors, but I myself have made a number of images that you need to look VERY closely at to even suspect they might be AI. Without knowing they were made with AI, most people wouldn't even question them. Especially in the context of erotica, it's often not necessarily the image itself that is erotic, but the viewer's imagination of the events surrounding the image (generally speaking, what will happen after the photo was taken).

My whole life, I've never had much extra cash sitting around, especially when I was younger. My parents couldn't afford to give me an allowance, and when I was old enough to work, most of that money went to necessities. So when it came to WAM, I had to be content with the teaser images and freebies and single magazine photos. I got used to only having one or two images of any given model or scenario and imagining the rest.

With AI, it's no different. There are a lot of people like me who can get turned on by a single image or a slideshow of many different models in different situations, and don't need a video or a whole series of images of the same model acting out a scene.

I think that's part of the draw of AI for me in the first place: I just need a starting image and my imagination will take care of the rest. I will spend literally hours tweaking and testing prompts (OCD sucks, btw) until I finally get the perfect image, or a perfect image I wasn't even intending to make.

While I understand the idea that AI can't replace authentic human behavior, it already can. I've seen many, many people fooled by AI images who loved the images until they found out they were made with AI. I've even seen anti-AI people argue that the image can't possibly be AI because they refuse to believe that they could be fooled by a computer.

We're already on the cusp of AI replicating reality. Granted, you won't see that as much here because most of us dabbling in AI aren't skilled enough to create those perfect images consistently, but as the technology's ease of use improves, so will the stuff we dabblers can create.

My point is, it won't be too long before the line between AI and real images will be so thin that the "Synthetic" tag will be the only way to tell the difference.

xman10 said: I have a hard time buying that generative AI will replicate what is most important about WAM content - authentic human behavior in the heavily nuanced world of erotica - anytime soon.

This.

If anyone doubts it, name a book, TV show or movie that accurately imitates human speech patterns or behavior.

Right. Some reality TV occasionally, or gameshows, which is part of the draw, but even talk shows are so pre-prepared.


Design & Code ©1998-2026 Loverbuns, LLC 18 U.S.C. 2257 Record-Keeping Requirements Compliance Statement
